Introduction

Welcome back. In the previous part we introduced Ansible and Batfish and installed the necessary components. We will now look at an example topology and dive into the Ansible modules that the Batfish role provides.

Note: The code for this guide can be found within the following repo: https://github.com/rickdonato/batfish-ansible-dual-tier-fw-demo

Topology

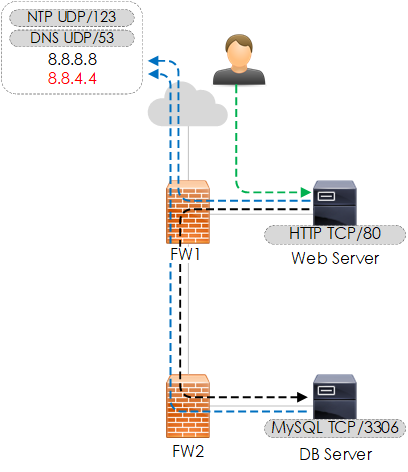

For the context of this article, our example network will be based on the following topology. Here are the key points about the topology:

- Dual firewall topology.

- Firewalls are based on Cisco ASA.

- Public client connects to webserver.

- Webserver connects to DB server.

- Both the web and db servers require access to NTP and DNS.

- Our firewall change will involve adding a new NTP/DNS IP -

8.8.4.4. - This environment is provided to you within the repo via a VIRL topology file -

topology.virl. - To create the environment run the command

make start-virl-network. Edit theMakefileto edit your VIRL IP and credentials.

Figure 1 - Topology Flows.

Create Snapshot

Playbook: create_bf_snapshot.yml

First, we need to create a Batfish snapshot by running the `create_bf_snapshot.yml` playbook located within the previously cloned Git repo, like so:

    cd ansible
    ansible-playbook playbooks/create_bf_snapshot.yml

Once complete, your snapshot will be located within `./ansible/data/snapshots`, as shown below:

    root@DESKTOP-AC9NU22:~/batfish-ansible-dual-tier-fw-demo# tree
    ansible/data/snapshots/
    └── snapshot
        ├── configs
        │   ├── fw1-asa.cfg
        │   └── fw2-asa.cfg
        ├── hosts
        │   ├── db.json
        │   └── websrv.json
        └── iptables
            ├── db.iptables
            └── websrv.iptables
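Before uploading the snapshot, it can be handy to confirm that the directory has the layout shown above. The following is a minimal sketch using only the Python standard library; the function name `summarize_snapshot` and the `EXPECTED_DIRS` tuple are illustrative and are not part of the repo or of Batfish.

```python
from pathlib import Path

# Sub-directories seen in the article's snapshot tree (illustrative constant).
EXPECTED_DIRS = ("configs", "hosts", "iptables")


def summarize_snapshot(snapshot_dir):
    """Return {subdir: sorted file names} for each expected sub-directory.

    A missing sub-directory maps to None so the caller can spot it.
    """
    root = Path(snapshot_dir)
    summary = {}
    for name in EXPECTED_DIRS:
        subdir = root / name
        if subdir.is_dir():
            summary[name] = sorted(p.name for p in subdir.iterdir() if p.is_file())
        else:
            summary[name] = None
    return summary


if __name__ == "__main__":
    # Path relative to the repo root, matching the tree output in the article.
    for name, files in summarize_snapshot("ansible/data/snapshots/snapshot").items():
        print(name, files)
```

A value of `None` for any of the three keys means that sub-directory was not created by the snapshot playbook.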

Initialize/Validate Snapshot

Initialize

Playbook: bf_init_snapshot.yml

Now that we have created our snapshot, we can initialize it. In other words, we upload the snapshot to the Batfish service so that Batfish can build the models that we will query later.

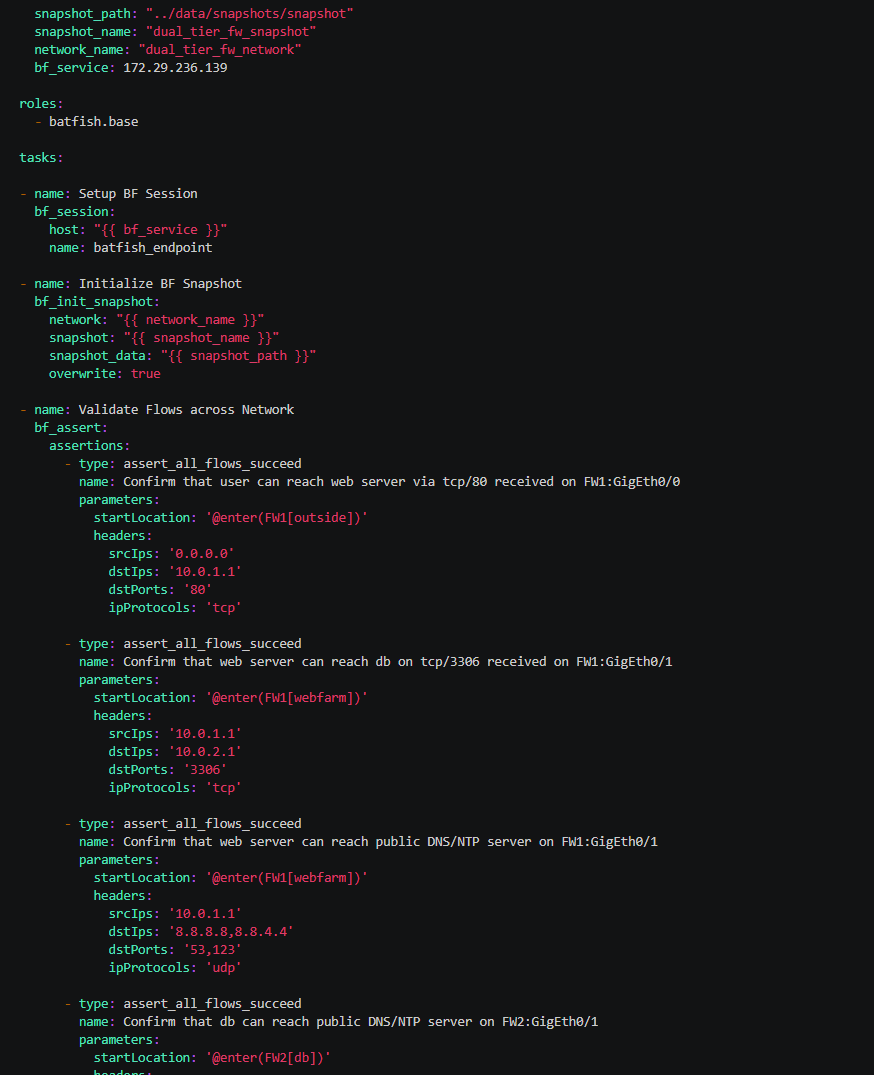

To initialize our snapshot within our Ansible playbooks, we use the `bf_session` and `bf_init_snapshot` modules from the Batfish role, as shown below.

    ---
    - name: Initialize Network and Snapshot
      hosts: localhost
      gather_facts: no
      vars:
        snapshot_path: "../data/snapshots/snapshot"
        snapshot_name: "dual_tier_fw_snapshot"
        network_name: "dual_tier_fw_network"
      roles:
        - batfish.base
      tasks:
        - name: Setup BF Session
          bf_session:
            host: "{{ bf_service }}"
            name: batfish_endpoint
        - name: Initialize BF Snapshot
          bf_init_snapshot:
            network: "{{ network_name }}"
            snapshot: "{{ snapshot_name }}"
            snapshot_data: "{{ snapshot_path }}"
            overwrite: true

Validate

Playbook: bf_validate_snapshot.yml

Once Batfish has initialized the snapshot, we need to validate how successfully Batfish parsed the data. Note that if Batfish encounters any issues during snapshot initialization, a warning is shown, like so:

    TASK [Initialize BF Snapshot] ********************************************************************************************************************************
    [WARNING]: Your snapshot was successfully initialized but Batfish failed to fully recognized some lines in one or more input files. Some unrecognized configuration lines are not uncommon for new networks, and it is often fine to proceed with further analysis. You can help the Batfish developers improve support for your network by running the bf_upload_diagnostics module: https://github.com/batfish/ansible/blob/master/docs/bf_upload_diagnostics.rst

To confirm the problematic configuration lines, we can use the bf_upload_diagnostics module with dry_run set to true.

    - name: Generate Snapshot Diag Info & Save Locally
      bf_upload_diagnostics:
        network: "{{ network_name }}"
        snapshot: "{{ snapshot_name }}"
        dry_run: true
      register: diag_data

With `dry_run` set to true, the upload is skipped and the snapshot diagnostic data is saved locally to a temporary directory under `/tmp`, like so:

    # ls -l /tmp/tmprlssh90c/
    total 16
    -rw-r--r-- 1 root root 3760 Dec 3 23:37 __fileParseStatus
    -rw-r--r-- 1 root root 2623 Dec 3 23:37 __initInfo
    -rw-r--r-- 1 root root 4417 Dec 3 23:37 __initIssues
    -rw-r--r-- 1 root root 2 Dec 3 23:37 metadata

To extract the configuration lines that Batfish had issues with, we need to look into `__initIssues`. Within our playbook, the file is loaded via the `lookup` plugin, its content is parsed from JSON via the `from_json` filter, and the required data is obtained by calling `get` against the appropriate keys. The path to the diagnostics directory is taken from the registered `diag_data.summary` by stripping everything up to the final colon. Like so:

    - name: Display Problematic Lines
      vars:
        diag_path: '{{ diag_data.summary | regex_replace("^.*:\s", "") }}/__initIssues'
      debug:
        msg: "{{ (lookup('file', diag_path) | from_json).get('answerElements')[0].get('rows') }}"

Based upon our example we get the following results.

    TASK [Display Problematic Lines] ***************************************************************************************
    ok: [localhost] => {
        "msg": [
            {
                "Details": "This syntax is unrecognized",
                "Line_Text": "aaa authentication login-history",
                "Nodes": null,
                "Parser_Context": "[aaa_authentication s_aaa stanza cisco_configuration]",
                "Source_Lines": [
                    {
                        "filename": "configs/fw2-asa.cfg",
                        "lines": [
                            83
                        ]
                    },
                    {
                        "filename": "configs/fw1-asa.cfg",
                        "lines": [
                            94
                        ]
                    }
                ],
                "Type": "Parse warning"
            },
            ...

Because the issue relates to the configuration line `aaa authentication login-history`, we can safely ignore it; it has no bearing on the validation of our rule sets.
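The extraction performed by the Display Problematic Lines task can also be sketched in plain Python, which is useful when inspecting `__initIssues` outside of Ansible. This is only an illustration: the function names are made up for this example, and the summary string used in the usage section is hypothetical, since the exact wording of `diag_data.summary` is not shown in this article. The JSON keys (`answerElements`, `rows`, `Line_Text`, `Source_Lines`) are those visible in the output above.

```python
import json
import re


def diag_dir_from_summary(summary):
    # Same effect as the playbook's regex_replace filter:
    # drop everything up to the last colon that is followed by whitespace.
    return re.sub(r"^.*:\s", "", summary)


def problematic_lines(init_issues_text):
    """Parse __initIssues content into (line text, filename, line numbers) tuples."""
    rows = json.loads(init_issues_text).get("answerElements")[0].get("rows")
    found = []
    for row in rows:
        # Source_Lines may be null/None for some rows, so fall back to an empty list.
        for source in row.get("Source_Lines") or []:
            found.append((row["Line_Text"], source["filename"], source["lines"]))
    return found


if __name__ == "__main__":
    # Hypothetical summary text; only the trailing path mirrors the article.
    print(diag_dir_from_summary("Hypothetical summary text: /tmp/tmprlssh90c"))

    # Trimmed-down sample with the same shape as the output shown in the article.
    sample = json.dumps({
        "answerElements": [{
            "rows": [{
                "Line_Text": "aaa authentication login-history",
                "Source_Lines": [
                    {"filename": "configs/fw2-asa.cfg", "lines": [83]},
                    {"filename": "configs/fw1-asa.cfg", "lines": [94]},
                ],
            }]
        }]
    })
    for text, filename, lines in problematic_lines(sample):
        print(f"{filename}:{lines} -> {text}")
```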

Gathering/Validating Facts

Playbook: bf_gather_validate_facts.yml

There are two modules provided for facts. These modules are:

- `bf_extract_facts` - Extracts and returns facts for a Batfish snapshot and saves them (one YAML file per node) to the output directory if specified.

- `bf_validate_facts` - Validates facts for the current Batfish snapshot against the facts in the `expected_facts` directory.

To summarize, if we just want to collect facts from our devices we use `bf_extract_facts`. If we want to validate our facts we use `bf_validate_facts`, which pulls the facts from the Batfish snapshot and validates them against what is in the `expected_facts` directory.

Their use within the playbook is shown below.

    - name: Retrieve BF Facts
      bf_extract_facts:
        output_directory: ../data/bf_facts/extract
    - name: Validate facts gathered by BF
      bf_validate_facts:
        expected_facts: ../data/bf_facts/expected
      register: bf_validate
      ignore_errors: true
    - name: Display BF validation result details
      debug: msg="{{ bf_validate }}"

To show a quick demo of fact validation, I have added an NTP server to the expected facts directory for FW1. When we run the playbook, we receive our validation results:

    TASK [Display BF validation result details] ******************************************************************************************************************
    ok: [localhost] => {
        "msg": {
            "changed": false,
            "failed": true,
            "msg": "Validation failed for the following nodes: ['fw1'].",
            "result": {
                "fw1": {
                    "NTP.NTP_Servers": {
                        "actual": [],
                        "expected": [
                            "8.8.8.8"
                        ]
                    }
                }
            },
            "summary": "Validation failed for the following nodes: ['fw1']."
        }
    }
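To make the shape of that result easier to reason about, here is a minimal sketch of an expected-versus-actual comparison that produces the same kind of `actual`/`expected` entry. It is not the `bf_validate_facts` implementation; the nested fact structure is an assumption inferred from the dotted `NTP.NTP_Servers` key in the output, and the `DNS` entry in the sample data is hypothetical.

```python
def flatten(facts, prefix=""):
    """Flatten nested dicts into {'A.B': value} pairs."""
    flat = {}
    for key, value in facts.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def compare_facts(expected, actual):
    """Return a mismatch entry for every expected key whose actual value differs."""
    actual_flat = flatten(actual)
    mismatches = {}
    for path, want in flatten(expected).items():
        got = actual_flat.get(path)
        if got != want:
            mismatches[path] = {"actual": got, "expected": want}
    return mismatches


if __name__ == "__main__":
    # Assumed structure: only the NTP server list is declared as expected.
    expected = {"NTP": {"NTP_Servers": ["8.8.8.8"]}}
    # Hypothetical extracted facts for fw1; the DNS key is made up for illustration.
    actual = {"NTP": {"NTP_Servers": []}, "DNS": {"DNS_Servers": []}}
    print(compare_facts(expected, actual))
```

Only keys present in the expected facts are checked in this sketch, so extra facts on the device side do not cause a failure.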

Assertion

Playbook: bf_validate_acls_flows.yml

Another key module provided via the Batfish role is `bf_assert`. As per the Batfish documentation, `bf_assert`:

Makes assertions about the contents and/or behavior of a Batfish snapshot.

A number of Batfish assertions are available (see the Batfish Ansible documentation). For the context of this article we will look at and use two of them: `assert_filter_has_no_unreachable_lines` and `assert_all_flows_succeed`.

assert_filter_has_no_unreachable_lines

The assertion `assert_filter_has_no_unreachable_lines` validates that each entry (filter line) in the ACL is reachable. An unreachable line is an entry that would never be hit because an earlier entry already matches the same traffic. Take the ACL below as an example: the second line would never be hit due to the preceding deny any any, and is therefore deemed unreachable.

    access-list acl-inside extended deny ip any4 any4
    access-list acl-inside extended permit udp host 10.0.2.1 host 8.8.8.8 eq domain

When using this assertion, we also specify which filters (ACLs) to check on each node. Below is an example of how this assertion looks within a playbook.

    - name: Validate ACLs
      bf_assert:
        assertions:
          - type: assert_filter_has_no_unreachable_lines
            name: Confirm that there are no unreachable lines
            parameters:
              filters: 'FW1["acl-outside", "acl-webfarm", "acl-inside"], FW2["acl-outside", "acl-db"]'
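The idea of an unreachable line can be illustrated with a small, deliberately simplified model. The sketch below flags a line only when one single earlier line fully covers it; Batfish's own analysis is more thorough (for example, it also considers the combined effect of several earlier lines), so treat this as an illustration of the concept and not as how Batfish works. The `AclLine` class and its fields are invented for this example.

```python
from dataclasses import dataclass
from ipaddress import ip_network
from typing import Optional


@dataclass(frozen=True)
class AclLine:
    action: str                      # "permit" or "deny"
    protocol: str                    # "ip" matches every protocol
    src: str                         # source as CIDR, e.g. "10.0.2.1/32"
    dst: str                         # destination as CIDR
    dst_port: Optional[int] = None   # None means any port


def covers(earlier, later):
    """True if every packet matched by `later` is also matched by `earlier`."""
    proto_ok = earlier.protocol == "ip" or earlier.protocol == later.protocol
    src_ok = ip_network(later.src).subnet_of(ip_network(earlier.src))
    dst_ok = ip_network(later.dst).subnet_of(ip_network(earlier.dst))
    port_ok = earlier.dst_port is None or earlier.dst_port == later.dst_port
    return proto_ok and src_ok and dst_ok and port_ok


def unreachable_lines(acl):
    """Return indexes of lines fully covered by a single earlier line."""
    return [i for i, line in enumerate(acl)
            if any(covers(prev, line) for prev in acl[:i])]


if __name__ == "__main__":
    # The two-line acl-inside example from the article.
    acl_inside = [
        AclLine("deny", "ip", "0.0.0.0/0", "0.0.0.0/0"),
        AclLine("permit", "udp", "10.0.2.1/32", "8.8.8.8/32", 53),
    ]
    print(unreachable_lines(acl_inside))
```

Swapping the two lines makes the list empty, because the narrower permit no longer sits behind the broad deny.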

assert_all_flows_succeed

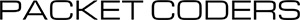

The assertion `assert_all_flows_succeed` validates that a flow, defined by a `startLocation` and a set of header attributes (such as source IP, destination port, etc.), can successfully reach its destination. Below is an example:

    - name: Validate Flows across Network
      bf_assert:
        assertions:
          - type: assert_all_flows_succeed
            name: Confirm that user can reach web server via tcp/80 received on FW1:GigEth0/0
            parameters:
              startLocation: '@enter(FW1[outside])'
              headers:
                srcIps: '0.0.0.0'
                dstIps: '10.0.1.1'
                dstPorts: '80'
                ipProtocols: 'tcp'
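One ingredient of such a flow check is how a single ACL treats the flow: rules are evaluated top-down, the first match decides, and anything unmatched hits the implicit deny. The sketch below models only that one step for the headers used above. Batfish itself evaluates the whole path from the start location, so this is a simplification, and the rule list is hypothetical and not taken from the repo's firewall configs.

```python
from ipaddress import ip_address, ip_network


def first_match_action(rules, flow):
    """Return the action of the first rule matching the flow, else 'deny'."""
    for rule in rules:
        if (rule["protocol"] in ("ip", flow["protocol"])
                and ip_address(flow["src_ip"]) in ip_network(rule["src"])
                and ip_address(flow["dst_ip"]) in ip_network(rule["dst"])
                and rule.get("dst_port") in (None, flow["dst_port"])):
            return rule["action"]
    return "deny"  # implicit deny at the end of the ACL


if __name__ == "__main__":
    # Hypothetical outside ACL: allow HTTP to the web server, nothing else.
    acl_outside = [
        {"action": "permit", "protocol": "tcp", "src": "0.0.0.0/0",
         "dst": "10.0.1.1/32", "dst_port": 80},
    ]
    # Headers taken from the assertion in the article.
    flow = {"protocol": "tcp", "src_ip": "0.0.0.0",
            "dst_ip": "10.0.1.1", "dst_port": 80}
    print(first_match_action(acl_outside, flow))
    # The same flow towards tcp/22 falls through to the implicit deny.
    print(first_match_action(acl_outside, {**flow, "dst_port": 22}))
```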

Coming Up

Next up, we will look into a workflow that will encompass the various steps and modules covered.