Overview

Not all of us Network Engineers have a dedicated network configuration backup tool for various reasons. Paid solutions like Orion are excellent but come with a high cost. There are solid open-source alternatives like Rancid or Oxidized, but setting them up can be a bit complex. Or maybe you just want something you have full control over, allowing you to tweak it as needed in the future.

In this blog post, we'll look at how to use Ansible to back up network configurations - a solution you might even call a 'Poor Man's NCM'!

Prerequisites and Tools of Choice

In this example, we'll use a few Cisco devices and a couple of Arista devices to demonstrate how to structure an Ansible project for a multi-vendor environment. Here are the high-level steps required to back up the configs using Ansible and push them to Git.

- Ansible connects to the devices and backs up the configurations.

- It saves a copy to the local Git repository.

- It commits and pushes the changes to GitLab.

- Linux's built-in cron scheduler runs the playbook on a schedule.

This blog post assumes you're somewhat familiar with Ansible and Git, but even if you're new, you can still follow along. If you want to learn the basics of Ansible, feel free to check out our previous Ansible introductory post.

Why Git?

Before we proceed, let's take a moment to understand what Git is and why Git is useful for this use case.

Git is a version control system that helps track changes to files over time. It allows you to store, manage, and retrieve previous versions of files, making it an excellent tool for maintaining network configuration backups.

Without Git, you'd have to manually manage multiple versions of configuration files. If you're backing up hundreds of devices and keeping copies for a few months, you'll quickly end up with thousands of files. Tracking changes between versions would be messy and inefficient.

Using Git solves this problem by allowing us to track configuration changes efficiently. Each day, we'll back up the latest configurations and commit them to a Git repository. This way, we can always revert to a previous version and use Git's built-in tools to compare changes over time.

In Git, we use a few key commands.

- `git add` stages files for commit, telling Git to track changes in those files. It doesn't actually save the changes to the repository yet; it just marks them to be included in the next commit. You can add specific files, or use `git add .` to stage everything that has changed.

- `git commit -m "message"` saves the staged changes to your local Git repository. The message should describe what was changed, so you can refer back to it later. This creates a snapshot of your project at that point in time.

- `git push` uploads your local commits to a remote repository like GitLab or GitHub. This makes your changes available to others (or to yourself from another machine) and keeps the remote repo up to date.
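As a rough illustration of these three commands working together, here is a minimal shell sketch. It assumes Git is installed and uses a throwaway bare repository in a temporary directory as a stand-in for GitLab; the file name, its content, and the "Backup Bot" identity are made up for the demo.

```shell
#!/usr/bin/env bash
set -euo pipefail

workdir="$(mktemp -d)"

# A local bare repository plays the role of the remote (GitLab in this post).
git init -q --bare "$workdir/remote.git"

# The working repository where backup files would be written.
git init -q "$workdir/local"
cd "$workdir/local"
git config user.name "Backup Bot"            # demo identity, not from the post
git config user.email "backup@example.com"
git remote add origin "$workdir/remote.git"

# Pretend this file is a freshly saved device configuration.
echo "hostname core-01" > core-01.cfg

git add .                                    # stage everything that changed
git commit -q -m "Device Backup (demo)"      # snapshot in the local repository
git push -q origin HEAD                      # upload the commit to the remote

git log --oneline
```

Against a real GitLab project, only the remote URL would differ; the add, commit, and push steps stay the same.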

For this example, we'll use GitLab to store our configuration backups. GitLab is a web-based Git repository that allows teams to collaborate on code and track changes. However, you can use any other remote Git service, such as GitHub or Bitbucket, to store your backups.

GitLab Setup

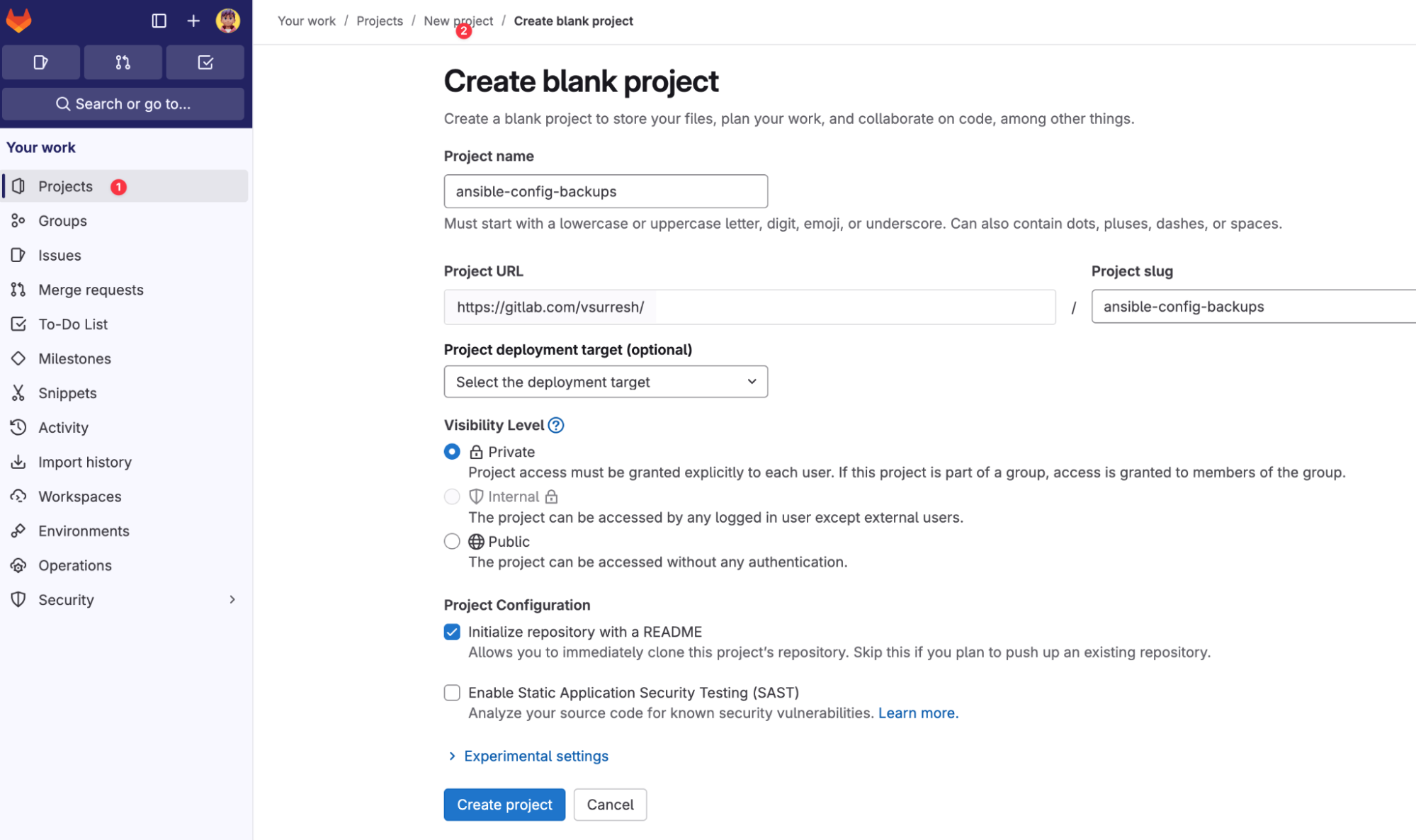

As mentioned earlier, you can use any remote Git repository for storing your backups. Some organizations even have internal Git servers for this purpose. In this example, we'll use GitLab to create a repository for storing network configuration backups.

The first step is to create a new project in GitLab. A project is where your code and files live. It acts as a central place to store, manage, and track changes to your files. In our case, we need a project to store the network configuration backups in a version-controlled way.

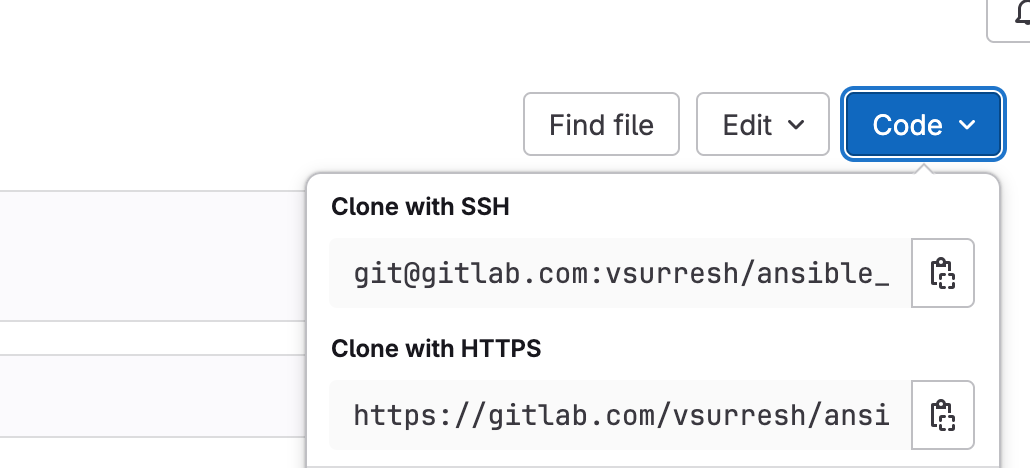

Once the project is set up, you'll need to clone it onto the server where Ansible will run the playbook. This creates a local copy of the repository, including all its files and history, so you can start working with it. Throughout this example, we will use a repo called ansible_config_backups.

You don't necessarily need to clone the repo manually, as the playbook includes a task that will clone it for you. Either way works fine.
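If you do want to handle the clone by hand, a "clone once, update afterwards" helper could look roughly like the sketch below. The `ensure_clone` function name is hypothetical, and the demo runs against a temporary local bare repository rather than the real GitLab URL so that it works offline. Unlike a forced checkout, this version only fast-forwards and never discards local changes.

```shell
#!/usr/bin/env bash
set -euo pipefail

# ensure_clone REPO_URL DEST
# Clones the repository on the first run and fast-forwards it on later runs.
ensure_clone() {
  local repo_url="$1" dest="$2"
  if [ -d "$dest/.git" ]; then
    git -C "$dest" pull -q --ff-only
  else
    git clone -q "$repo_url" "$dest"
  fi
}

# Demo setup: a throwaway remote containing a single empty commit.
demo="$(mktemp -d)"
git init -q --bare "$demo/remote.git"
git clone -q "$demo/remote.git" "$demo/seed" 2>/dev/null
git -C "$demo/seed" -c user.name="Demo" -c user.email="demo@example.com" \
  commit -q --allow-empty -m "Initial commit"
git -C "$demo/seed" push -q origin HEAD

ensure_clone "$demo/remote.git" "$demo/ansible_config_backups"   # first run: clones
ensure_clone "$demo/remote.git" "$demo/ansible_config_backups"   # second run: pulls
echo "Repository ready at $demo/ansible_config_backups"
```

In practice you would pass your project's SSH or HTTPS URL and the absolute path where the backups should live.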

In our Ansible playbook, we'll specify the path to this local Git repository and tell Ansible where to save the configuration files. Once the backups are created, we'll use Git to commit and push them to GitLab, ensuring we have a versioned history of all changes.

Ansible Setup

Now that we have our Git repository ready, let’s go over the Ansible structure for backing up network configurations. We’ve organized the files in a way that makes it easy to manage and update.

We're not going into too much detail here since we've covered this extensively in a previous post. If you want to learn more about the basics, feel free to check that out.

.
├── ansible.cfg
├── backup.yml
└── inventory
    ├── group_vars
    │   ├── all.yml
    │   ├── arista_server_switches.yml
    │   └── cisco_access.yml
    ├── host_vars
    └── hostfile.yml

4 directories, 6 files

Ansible Configuration File

The ansible.cfg file disables host key checking and sets the default inventory file location to /Users/bob/ansible_code/inventory/hostfile.yml, so Ansible knows where to find the inventory file.

[defaults]
host_key_checking = False
inventory = /Users/bob/ansible_code/inventory/hostfile.yml

Inventory and Group Variables

The inventory is structured to handle multiple vendors. We have a hostfile.yml that categorizes devices into two groups.

hostfile.yml

all:
  children:
    cisco_access:
      hosts:
        core-01:
          ansible_host: 192.168.100.210
        core-02:
          ansible_host: 192.168.100.211
        access-01:
          ansible_host: 192.168.100.212
        access-02:
          ansible_host: 192.168.100.213
    arista_server_switches:
      hosts:
        server-sw-01:
          ansible_host: 192.168.100.101
        server-sw-02:
          ansible_host: 192.168.100.102

- `cisco_access`: Contains Cisco Access Switches.

- `arista_server_switches`: Contains Arista Server Switches.

Each group has an associated group_vars file that defines Ansible-specific settings like connection methods, authentication details, and vendor type. This ensures that the correct module and credentials are used when connecting to each device.

**Note:** In a production environment, never store credentials in plain text. Always use secure methods like Ansible Vault to encrypt sensitive information and keep your configurations secure.
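As one possible way to follow that advice, the sketch below encrypts a single value with `ansible-vault encrypt_string`. The temporary password file is an assumption made for the demo (in practice you would keep it in a fixed location outside the Git repository), and the `command -v` guard exists only so the sketch still runs on a machine without Ansible installed.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Demo-only location for the vault password; keep the real one out of Git.
vault_pass_file="$(mktemp)"
chmod 600 "$vault_pass_file"
head -c 32 /dev/urandom | base64 > "$vault_pass_file"

# Encrypt the value 'admin' under the variable name ansible_password.
if command -v ansible-vault >/dev/null 2>&1; then
  ansible-vault encrypt_string \
    --vault-password-file "$vault_pass_file" \
    'admin' --name 'ansible_password'
else
  echo "ansible-vault not found; skipping the encryption step"
fi
```

The command prints an `ansible_password: !vault |` block that can replace the plain-text value in a group_vars file. The playbook then needs the same password at run time, for example through `ansible-playbook --vault-password-file`.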

cisco_access.yml

---
ansible_connection: ansible.netcommon.network_cli
ansible_network_os: cisco.ios.ios
ansible_become: yes
ansible_become_method: enable
ansible_user: admin
ansible_password: admin
ansible_become_password: admin
vendor: cisco

arista_server_switches.yml

---
ansible_connection: ansible.netcommon.network_cli
ansible_network_os: arista.eos.eos
ansible_become: yes
ansible_become_method: enable
ansible_user: admin
ansible_password: admin
ansible_become_password: admin
vendor: arista

Please note that the vendor variable defined here is something we will use later. When we take the backups, we will group the backups based on the vendor. If you prefer to group them by site or platform, feel free to add additional variables here.

all.yml

---

git_repo_path: "/Users/bob/ansible_config_backups"

We’ll use group_vars/all.yml to define variables that are common to all hosts in the inventory. This helps keep things organized and makes the playbook easier to manage. In our case, we'll use it to specify the local path to the Git repository where the backup files will be stored.

Main Playbook

This is our main playbook, and we’ve structured it into three main parts. First, we clone the Git repository. If the repo is already cloned, the playbook will still run without any issues. The second part backs up the device configurations and saves them in the repo directory. Finally, we commit and push the changes to the remote repository, which is GitLab in this example.

backup.yml

---
- name: Clone Git Repo
  hosts: localhost
  connection: local
  tasks:
    - name: Checkout Git Repo
      ansible.builtin.git:
        repo: 'git@gitlab.com:vsurresh/ansible_config_backups.git'
        dest: "{{ git_repo_path }}"
        clone: yes
        update: yes
        force: yes

- name: Backup Network Device Configs
  hosts: all
  gather_facts: no
  tasks:
    - name: Cisco Config Backup
      cisco.ios.ios_config:
        backup: true
        backup_options:
          filename: "{{ inventory_hostname }}.cfg"
          dir_path: "{{ git_repo_path }}/{{ vendor }}"
      when: vendor == 'cisco'

    - name: Arista Config Backup
      arista.eos.eos_config:
        backup: true
        backup_options:
          filename: "{{ inventory_hostname }}.cfg"
          dir_path: "{{ git_repo_path }}/{{ vendor }}"
      when: vendor == 'arista'

- name: Git Commit and Push
  hosts: localhost
  connection: local
  tasks:
    - name: Set timestamp
      set_fact:
        timestamp: "{{ lookup('pipe', 'date +%Y-%m-%d_%H:%M:%S') }}"
      delegate_to: localhost
      run_once: yes

    - name: git commands
      shell: |
        git add .
        git commit -m "Device Backup on {{timestamp}} "
        git push
      args:
        chdir: "{{ git_repo_path }}"
      delegate_to: localhost
      run_once: yes

The playbook begins by cloning the Git repo locally using the ansible.builtin.git module. The repo URL is defined in the repo parameter, and it’s cloned to the location specified by {{ git_repo_path }} (Remember we added this in our group_vars/all.yml file). This ensures that the latest version of the repository is available before proceeding with any backups.

Once the repo is cloned, the playbook connects to all devices defined in the inventory and performs a configuration backup. It uses conditional logic to determine the device vendor. If the device is:

- Cisco (`when: vendor == 'cisco'`), it uses the `cisco.ios.ios_config` module.

- Arista (`when: vendor == 'arista'`), it uses the `arista.eos.eos_config` module.

In both cases, backups are stored under {{ git_repo_path }}/{{ vendor }} with the filename matching the device's inventory hostname, for example core-01.cfg.
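To make the resulting layout concrete, here is a small shell sketch that builds the same path the playbook does from `dir_path` and `filename`. The `backup_path` helper is hypothetical and exists only for illustration; the repo path, vendor names, and hostnames are the ones used in this post.

```shell
#!/usr/bin/env bash
set -euo pipefail

git_repo_path="/Users/bob/ansible_config_backups"   # value from group_vars/all.yml

# backup_path VENDOR INVENTORY_HOSTNAME
# Mirrors dir_path ("<repo>/<vendor>") plus filename ("<hostname>.cfg").
backup_path() {
  local vendor="$1" inventory_hostname="$2"
  echo "${git_repo_path}/${vendor}/${inventory_hostname}.cfg"
}

backup_path cisco core-01
# prints /Users/bob/ansible_config_backups/cisco/core-01.cfg
backup_path arista server-sw-01
# prints /Users/bob/ansible_config_backups/arista/server-sw-01.cfg
```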

After all device configurations are backed up, the playbook returns to the control host to perform Git operations.

- It first sets a timestamp using `set_fact` and `lookup('pipe', 'date +%Y-%m-%d_%H:%M:%S')`.

- Then, within the Git repo directory, it runs a shell task to add all changes (`git add .`), commit them with a message like "Device Backup on 2024-03-19_10:30:00", and push to the remote repo.

- Git only records files whose contents differ from the last commit. If no device's config has changed, there is nothing to commit and no new commit is created.
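The "only commit when something changed" behaviour can also be made explicit. The sketch below is one way to do it in plain shell and is not what the playbook itself runs: the `commit_if_changed` helper is hypothetical, the demo repository and config content are made up, and the push step is left out so that it runs without a remote.

```shell
#!/usr/bin/env bash
set -euo pipefail

# commit_if_changed REPO_PATH MESSAGE
# Stages everything, then commits only if the staging area differs from HEAD.
commit_if_changed() {
  local repo_path="$1" message="$2"
  git -C "$repo_path" add .
  if git -C "$repo_path" diff --cached --quiet; then
    echo "no changes"
    return 0
  fi
  git -C "$repo_path" commit -q -m "$message"
  echo "committed"
}

# Demo in a throwaway repository.
repo="$(mktemp -d)"
git init -q "$repo"
git -C "$repo" config user.name "Backup Bot"
git -C "$repo" config user.email "backup@example.com"
mkdir -p "$repo/cisco"
echo "hostname core-01" > "$repo/cisco/core-01.cfg"

timestamp="$(date +%Y-%m-%d_%H:%M:%S)"
first="$(commit_if_changed "$repo" "Device Backup on $timestamp")"
second="$(commit_if_changed "$repo" "Device Backup on $timestamp")"
echo "$first / $second"   # prints: committed / no changes
```

`git diff --cached --quiet` exits with status 0 when nothing is staged, which is what lets the helper skip the commit cleanly.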

Please note that we've used absolute paths instead of relative paths. I recommend doing it this way because when running Ansible from a cron job, the working directory isn't always the same. Using absolute paths ensures that Ansible and Git commands work reliably, no matter where the script runs from.

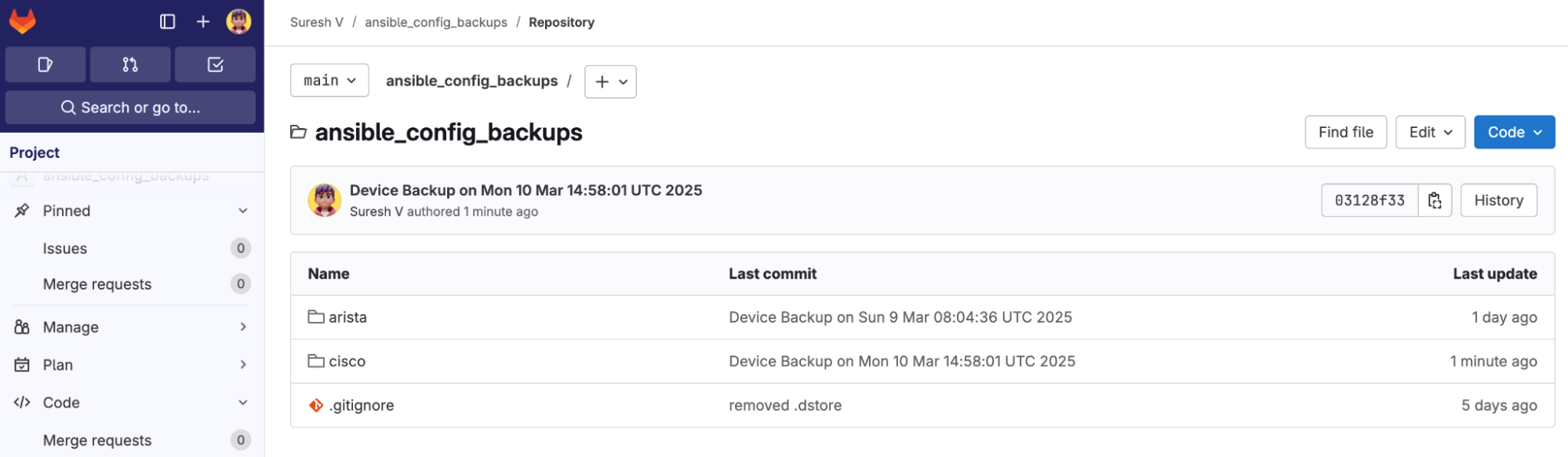

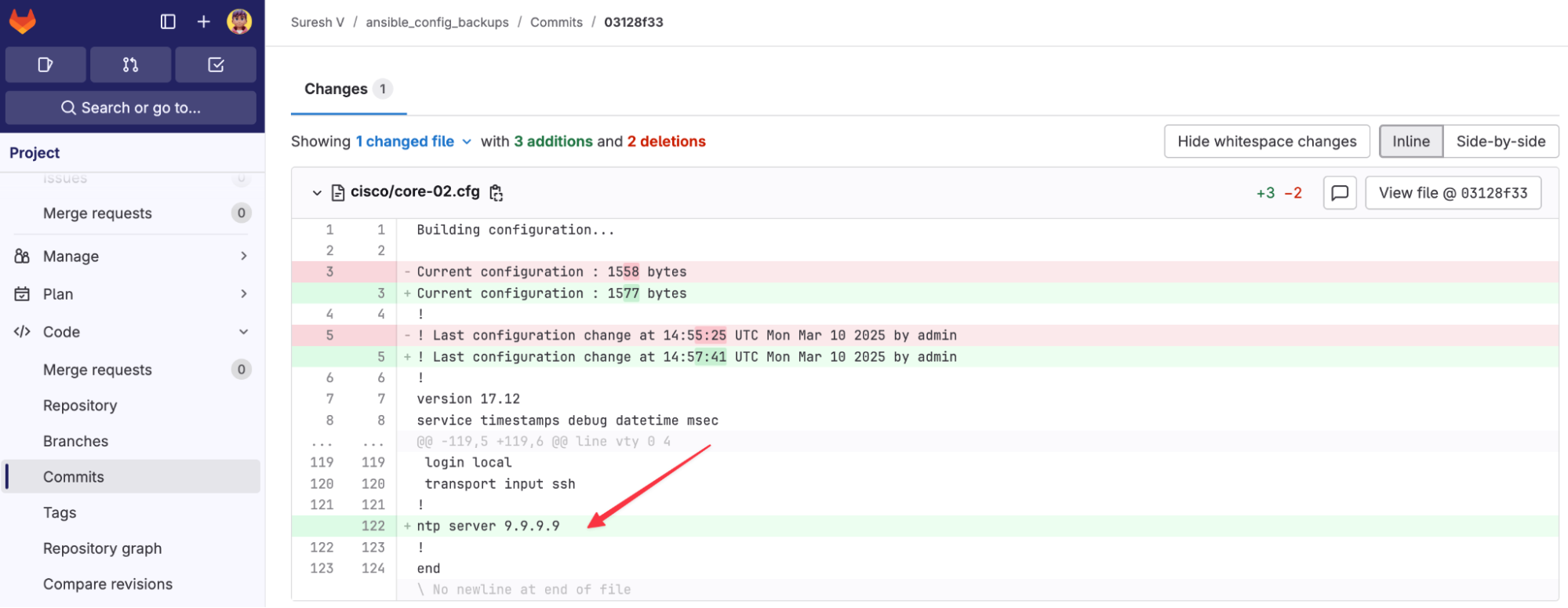

So, when we run the playbook, Ansible will connect to the devices, take a backup, save it locally, and then run the Git commands to push it to GitLab. I took an initial backup of the devices, and now I'm going to make a change to one of the devices (core-02) and re-run the playbook to see how it reflects in the results.

core-02(config)#ntp server 9.9.9.9
core-02(config)#end

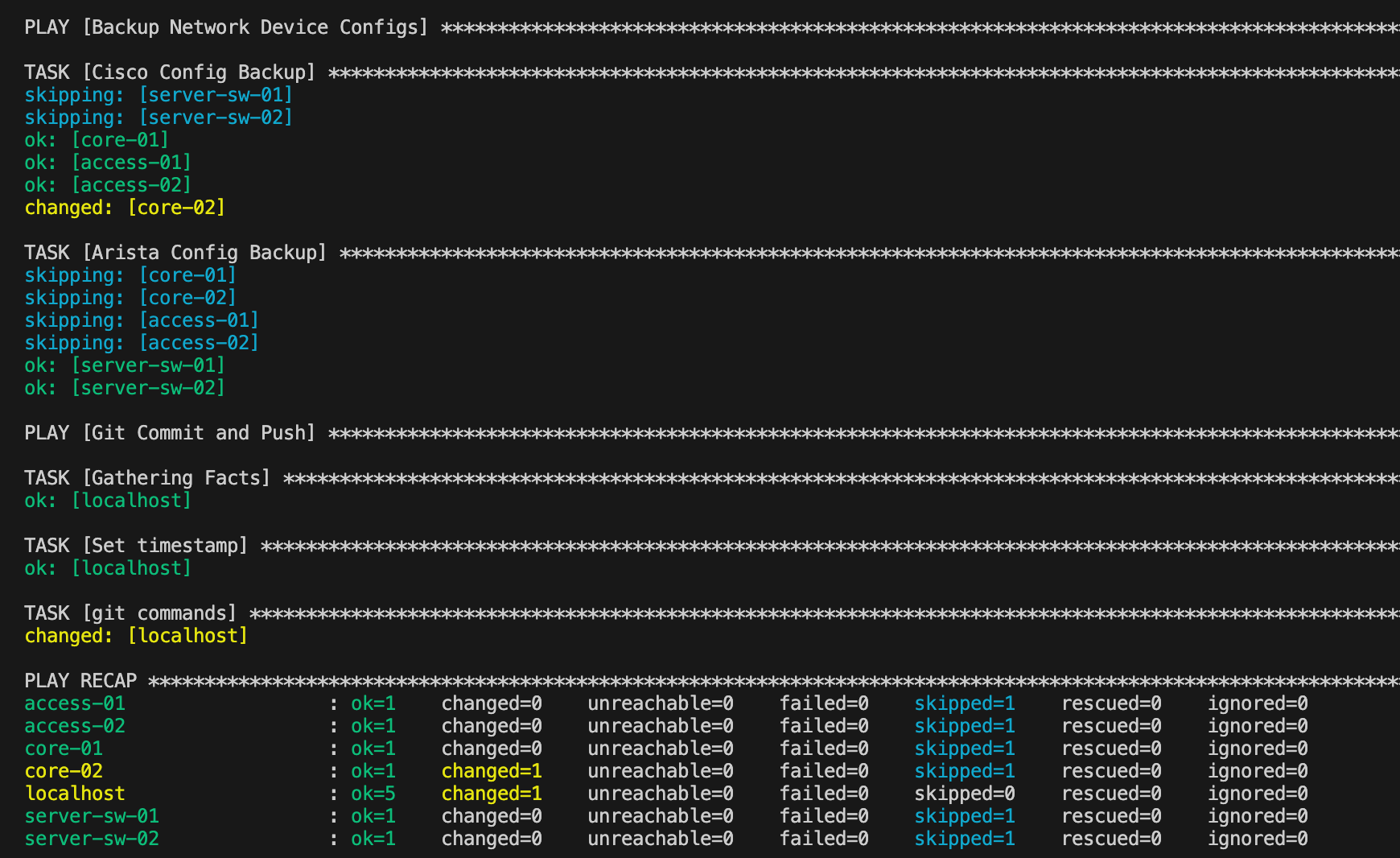

In the PLAY RECAP, we can see that core-02 has changed=1, which means Ansible detected a difference in the device’s configuration and backed up the new version. The other devices show changed=0, meaning their configurations remained the same, so Ansible didn't create a new backup for them.

If we rerun the playbook and there are no changes in the configuration, Git finds nothing new to commit, so no commit is created and nothing new is pushed. This ensures that only actual changes are tracked, keeping the repository clean and avoiding unnecessary commits.
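Once a change like this has been committed, Git can show exactly what differs between two backups. The following sketch reproduces the idea in a throwaway repository; the config content is invented for the demo and is far shorter than a real running config.

```shell
#!/usr/bin/env bash
set -euo pipefail

repo="$(mktemp -d)"
git init -q "$repo"
git -C "$repo" config user.name "Backup Bot"
git -C "$repo" config user.email "backup@example.com"
mkdir -p "$repo/cisco"

# First backup (made-up content).
printf 'hostname core-02\nntp server 1.1.1.1\n' > "$repo/cisco/core-02.cfg"
git -C "$repo" add .
git -C "$repo" commit -q -m "Device Backup (before change)"

# Second backup, taken after 'ntp server 9.9.9.9' was added on the device.
printf 'hostname core-02\nntp server 1.1.1.1\nntp server 9.9.9.9\n' > "$repo/cisco/core-02.cfg"
git -C "$repo" add .
git -C "$repo" commit -q -m "Device Backup (after change)"

# What changed between the last two backups of this device?
diff_out="$(git -C "$repo" diff HEAD~1 HEAD -- cisco/core-02.cfg)"
echo "$diff_out"

# Fetch the previous version of the file without touching the working copy.
previous="$(git -C "$repo" show HEAD~1:cisco/core-02.cfg)"
echo "$previous"
```

The diff contains a line starting with `+ntp server 9.9.9.9`, and `git show` gives you the older file contents if you ever need to restore them.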

Cronjob for Scheduled Backups

A cron job is a scheduled task in Linux that runs automatically at specified intervals. To add a new cron job, you can use the crontab -e command and define the schedule in the crontab file.

Since cron jobs don’t necessarily run from the directory we want, and we also need to activate our Python virtual environment (venv), we wrap this inside a Bash script. A virtual environment (venv) in Python is an isolated environment that allows us to install dependencies separately from the system-wide Python installation. In our script, we activate the virtual environment before running the Ansible playbook to ensure it runs with the correct dependencies.

backup_script.sh

#!/usr/bin/env bash
source /Users/bob/ansible_code/venv/bin/activate
ANSIBLE_CONFIG=/Users/bob/ansible_code/ansible.cfg ansible-playbook /Users/bob/ansible_code/backup.yml

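In the script, we explicitly specified the **Ansible configuration file** by setting the ANSIBLE_CONFIG environment variable in front of the ansible-playbook command. By setting this variable, Ansible reads the intended ansible.cfg no matter which directory cron starts the script in, which avoids issues with missing or incorrect configurations when the script runs automatically.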
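If the `VAR=value command` form is unfamiliar, this short sketch shows how it behaves. A small `bash -c` child process stands in for ansible-playbook here (an assumption made so the demo runs without Ansible): the variable is visible to that one command only and does not leak into the rest of the script.

```shell
#!/usr/bin/env bash
set -euo pipefail

unset ANSIBLE_CONFIG   # start from a clean environment for the demo

# Stand-in for ansible-playbook: reports the value it inherits.
child='echo "child sees: ${ANSIBLE_CONFIG:-unset}"'

with_prefix="$(ANSIBLE_CONFIG=/Users/bob/ansible_code/ansible.cfg bash -c "$child")"
without_prefix="$(bash -c "$child")"

echo "$with_prefix"      # child sees: /Users/bob/ansible_code/ansible.cfg
echo "$without_prefix"   # child sees: unset
echo "parent shell: ${ANSIBLE_CONFIG:-unset}"   # parent shell: unset
```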

Finally, you can add a cron job using the crontab -e command. In this case, the job is configured to run every day at 00:15, executing the bash script.

15 00 * * * /Users/bob/ansible_code/backup_script.sh >> /Users/bob/ansible_code/backup.log 2>&1

Make sure to specify the absolute path to the script. Additionally, logs are saved to a log file, so if the job does not run or you have some issues, you can check the log to identify the issue.
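For readers new to crontab syntax, the sketch below splits the entry above into its five schedule fields and the command. It only parses the line as text and does not install anything into your crontab; the variable names are labels chosen for the demo.

```shell
#!/usr/bin/env bash
set -euo pipefail

cron_line='15 00 * * * /Users/bob/ansible_code/backup_script.sh >> /Users/bob/ansible_code/backup.log 2>&1'

# read splits on whitespace; the last variable receives the rest of the line.
read -r minute hour day_of_month month day_of_week command <<< "$cron_line"

echo "minute:        $minute"         # 15
echo "hour:          $hour"           # 00
echo "day of month:  $day_of_month"   # * (every day)
echo "month:         $month"          # * (every month)
echo "day of week:   $day_of_week"    # * (every weekday)
echo "command:       $command"
```

The `>> ... 2>&1` part of the command appends both standard output and standard error to the log file, which is why errors from the playbook also end up in backup.log.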

Closing Thoughts

I hope this gives you a clear idea of how easily we can set up a simple configuration backup tool in a multi-vendor environment. Git complements this setup by tracking changes and storing backups in a remote repository, ensuring we always have a version history.

If you want to learn more about Ansible or Git, feel free to check out our courses, where we dive deeper into each topic.