Introduction

In our previous posts, we installed the management plane (VSD) and the controller (VSC).

We will now install the VRS. VRS is the virtual switch that replaces OVS within each of the compute nodes. This is achieved via an RPM install on each of the nodes.

Overview

Installing the VRS involves quite a few steps.

The key things to remember are:

- configuring the OpenStack controller requires communication with the VSD,

- configuring the compute nodes requires communication with the controller (VSC).
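Since both halves of the install depend on reachability, it can save time to check connectivity before starting. The following is a minimal sketch, assuming bash and the coreutils `timeout` command are available; `port_open` is a hypothetical helper name, and the VSD address and port 8443 are the ones used in the plugin configuration later in this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if a TCP connection to HOST:PORT opens within 3 seconds.
# Relies on bash's /dev/tcp pseudo-device and the coreutils `timeout` command.
port_open() {
    local host="$1" port="$2"
    timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

VSD_IP=172.29.236.184   # VSD address used later in this post
if port_open "$VSD_IP" 8443; then
    echo "VSD API port reachable"
else
    echo "VSD API port NOT reachable"
fi
```

The same helper could be pointed at the VSC from a compute node, using whichever port your deployment exposes.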

Here's an overview of the steps:

Controller/Network Node

- Disable SELinux.

- Install the Nuage RPMs.

- Create a new user within the VSD.

- Update the various config files (Nova, Neutron ML2).

- CMS-ID generation via communication to the VSD.

- Update Horizon.

- Update DB.

- Restart services.

Compute Node

- Disable SELinux.

- Install VRS.

- Update config files (Metadata and Nova). This will include the controller IPs.

- Restart services.

Once complete we will check on the controller to confirm that the VRS (a single instance in this case) is seen.

Note: the steps above have been created for lab use only (i.e., they include disabling SELinux and similar shortcuts).

Let's begin...

Prerequisites

RPMs

Upload the following files to the controller:

- nuage-openstack-horizon-11.0.0-5.3.2_20_nuage.noarch.rpm

- nuage-openstack-neutron-10.0.0-5.3.2_20_nuage.noarch.rpm

- nuage-openstack-neutronclient-6.1.0-5.3.2_20_nuage.noarch.rpm

- nuage-nova-extensions-15.0.0-5.3.2_20_nuage.noarch.rpm

- nuage-openstack-upgrade-5.3.2-20.tar.gz

Upload the following RPMs to the compute node:

- nuage-openvswitch-5.3.2-28.el7.x86_64.rpm

- nuage-metadata-agent-*.x86_64.rpm

Crudini

On all nodes, install crudini as shown below. This small utility simplifies the process of updating ini files.

easy_install crudini

Disable SELinux

Disable SELinux on all nodes:

sed -i 's/SELINUX=.*$/SELINUX=disabled/g' /etc/selinux/config

setenforce 0
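To see what the sed edit does without touching the real file, here is a small sketch that runs it against a throwaway copy. The sample file content is an assumption modelled on a typical /etc/selinux/config, and the pattern is anchored with `^` so that comment lines mentioning SELINUX= are left alone (GNU sed is assumed for `-i`).

```shell
# Work on a temporary stand-in for /etc/selinux/config.
selinux_cfg="$(mktemp)"
printf '%s\n' \
    '# SELINUX= can take one of these three values:' \
    'SELINUX=enforcing' \
    'SELINUXTYPE=targeted' > "$selinux_cfg"

# Same substitution as above, anchored to the start of the line.
sed -i 's/^SELINUX=.*$/SELINUX=disabled/' "$selinux_cfg"

# Read the configured mode back to confirm the change.
selinux_mode="$(sed -n 's/^SELINUX=//p' "$selinux_cfg")"
echo "configured mode: ${selinux_mode}"
```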

Controller/Network Node

Update/Disable Service

systemctl stop neutron-dhcp-agent.service

systemctl stop neutron-l3-agent.service

systemctl stop neutron-metadata-agent.service

systemctl stop neutron-openvswitch-agent.service

systemctl stop neutron-netns-cleanup.service

systemctl stop neutron-ovs-cleanup.service

systemctl stop neutron-server.service

systemctl disable neutron-dhcp-agent.service

systemctl disable neutron-l3-agent.service

systemctl disable neutron-metadata-agent.service

systemctl disable neutron-openvswitch-agent.service

systemctl disable neutron-netns-cleanup.service

systemctl disable neutron-ovs-cleanup.service
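If you prefer not to type each command, the list above can be expressed as a loop. This is only a sketch: `run` and `stop_and_disable_agents` are hypothetical helper names, and by default the commands are printed rather than executed so you can review them first (set DRY_RUN=0 to actually run them).

```shell
# Units that are both stopped and disabled in the steps above.
agent_units="neutron-dhcp-agent neutron-l3-agent neutron-metadata-agent neutron-openvswitch-agent neutron-netns-cleanup neutron-ovs-cleanup"

# Hypothetical wrapper: prints the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "$@"
    else
        echo "$*"
    fi
}

stop_and_disable_agents() {
    for unit in $agent_units; do
        run systemctl stop "${unit}.service"
        run systemctl disable "${unit}.service"
    done
    # neutron-server is only stopped; it is restarted later in this post.
    run systemctl stop neutron-server.service
}

stop_and_disable_agents
```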

Install RPMs

rpm -iv nuage-openstack-horizon-11.0.0-5.3.2_20_nuage.noarch.rpm

rpm -iv nuage-openstack-neutron-10.0.0-5.3.2_20_nuage.noarch.rpm

rpm -iv nuage-openstack-neutronclient-6.1.0-5.3.2_20_nuage.noarch.rpm

rpm -iv nuage-nova-extensions-15.0.0-5.3.2_20_nuage.noarch.rpm

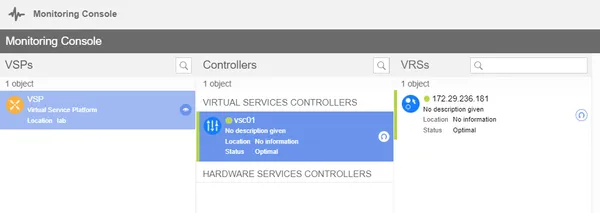

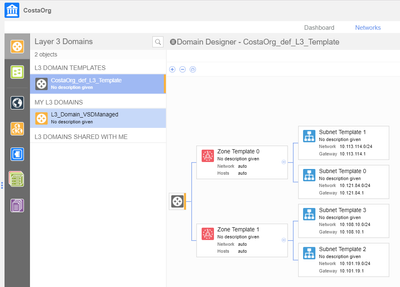

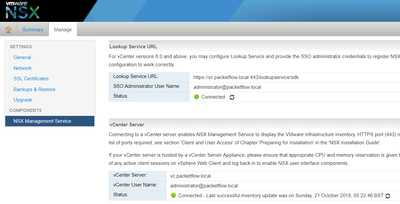

Create VSD User

Create a new user within the VSD with the credentials cmsuser:cmsuser, then add the user to the CMS group and the Root group.

Figure 1: Create VSD user.

Update Config Files

Neutron

NEUTRON_CONF=/etc/neutron/neutron.conf

crudini --set "${NEUTRON_CONF}" DEFAULT service_plugins "NuageL3, NuageAPI, NuagePortAttributes"

crudini --set "${NEUTRON_CONF}" DEFAULT core_plugin "ml2"

ML2

ML2_INI=/etc/neutron/plugins/ml2/ml2_conf.ini

crudini --set "${ML2_INI}" ml2 mechanism_drivers nuage

crudini --set "${ML2_INI}" ml2 extension_drivers "nuage_subnet, nuage_port, port_security"
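After setting values it is worth reading them back. On the real nodes, crudini --get (used later in this post) does exactly that. The sketch below emulates the read-back with awk so it can be tried on a scratch file; `ini_get` is a hypothetical helper, and the sample file content (including the `[other]` section) is an assumption for illustration only.

```shell
# Hypothetical helper: print the value of KEY inside [SECTION] of an ini FILE.
# usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^\[.*\][ \t]*$/ {
            # Remember which section we are in.
            current = $0
            sub(/^\[/, "", current)
            sub(/\][ \t]*$/, "", current)
            next
        }
        current == section {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim whitespace around the key
            gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim whitespace around the value
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Scratch file standing in for ml2_conf.ini.
sample_ini="$(mktemp)"
printf '%s\n' \
    '[ml2]' \
    'mechanism_drivers = nuage' \
    'extension_drivers = nuage_subnet, nuage_port, port_security' \
    '[other]' \
    'mechanism_drivers = ignored' > "$sample_ini"

ini_get "$sample_ini" ml2 mechanism_drivers
ini_get "$sample_ini" ml2 extension_drivers
```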

Nuage Plugin

VSD_IP=172.29.236.184

mkdir -p /etc/neutron/plugins/nuage/

rm -rf /etc/neutron/plugin.ini

ln -s /etc/neutron/plugins/nuage/nuage_plugin.ini /etc/neutron/plugin.ini

echo "

[RESTPROXY]

# Desired Name of VSD Organization/Enterprise to use when net-partition

# is not specified

default_net_partition_name = OpenStack_default

# Hostname or IP address and port for connection to VSD server

server = $VSD_IP:8443

# VSD Username and password for OpenStack plugin connection

# User must belong to CSP Root group and CSP CMS group

serverauth = cmsuser:cmsuser

nuage_fip_underlay = True

### Do not change the below options for standard installs

organization = csp

auth_resource = /me

serverssl = True

base_uri = /nuage/api/v5_0

cms_id =

[PLUGIN]

default_allow_non_ip = True" > /etc/neutron/plugins/nuage/nuage_plugin.ini
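As an alternative to the long echo, the same file can be written with a here-document, which avoids having to worry about quotes inside the text. This is a sketch with the comments trimmed and the output redirected to a temporary file (a stand-in for /etc/neutron/plugins/nuage/nuage_plugin.ini) so it is safe to try anywhere.

```shell
VSD_IP=172.29.236.184
plugin_ini="$(mktemp)"   # stand-in for the real nuage_plugin.ini path

# An unquoted EOF delimiter means ${VSD_IP} is expanded while writing.
cat > "$plugin_ini" <<EOF
[RESTPROXY]
default_net_partition_name = OpenStack_default
server = ${VSD_IP}:8443
serverauth = cmsuser:cmsuser
nuage_fip_underlay = True
organization = csp
auth_resource = /me
serverssl = True
base_uri = /nuage/api/v5_0
cms_id =

[PLUGIN]
default_allow_non_ip = True
EOF

# Confirm the VSD address was substituted.
grep '^server = ' "$plugin_ini"
```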

Nova

NOVA_CONF=/etc/nova/nova.conf

crudini --set "${NOVA_CONF}" DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

crudini --set "${NOVA_CONF}" DEFAULT use_neutron True

crudini --set "${NOVA_CONF}" neutron ovs_bridge alubr0

crudini --set "${NOVA_CONF}" libvirt vif_driver nova.virt.libvirt.vif.LibvirtGenericVIFDriver

Horizon

ALIAS_UPDATE='Alias /dashboard/static/nuage "/usr/lib/python2.7/site-packages/nuage_horizon/static"'

sed -i "s|Alias declarations.*DocumentRoot|&\n $ALIAS_UPDATE|g" /etc/httpd/conf.d/15-horizon_vhost.conf

sed -i "s/HORIZON_CONFIG = {/&\n\ 'customization_module\'\: \'nuage_horizon.customization\'\,/g" /usr/share/openstack-dashboard/openstack_dashboard/local/local_settings.py

sed '/Directory>/r'<(

echo " <Directory \"/usr/lib/python2.7/site-packages/nuage_horizon\">"

echo " Options FollowSymLinks"

echo " AllowOverride None"

echo " Require all granted"

echo " </Directory>"

) -i -- /etc/httpd/conf.d/15-horizon_vhost.conf

Create CMS ID

mkdir -p openstack-upgrade

tar xzf nuage-openstack-upgrade-5.3.2-20.tar.gz -C openstack-upgrade/

cd openstack-upgrade

python generate_cms_id.py --config-file /etc/neutron/plugin.ini

Note: Once this step is completed, the cms_id value, which was left empty in the Nuage plugin file we created a few steps back, will be populated.
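A quick way to confirm this is to grep the plugin file for a non-empty cms_id. The sketch below uses a hypothetical helper, `cms_id_is_set`, and demonstrates it on two scratch files; the ID shown is a made-up placeholder, not a real CMS ID. On the controller you would point it at /etc/neutron/plugins/nuage/nuage_plugin.ini instead.

```shell
# Hypothetical helper: true when FILE has a cms_id line with a non-empty value.
cms_id_is_set() {
    grep -Eq '^[[:space:]]*cms_id[[:space:]]*=[[:space:]]*[^[:space:]]+' "$1"
}

# Scratch files: one before and one after running generate_cms_id.py.
empty_ini="$(mktemp)"
printf '%s\n' '[RESTPROXY]' 'cms_id =' > "$empty_ini"
filled_ini="$(mktemp)"
printf '%s\n' '[RESTPROXY]' 'cms_id = example-placeholder-id' > "$filled_ini"

for f in "$empty_ini" "$filled_ini"; do
    if cms_id_is_set "$f"; then
        echo "cms_id populated"
    else
        echo "cms_id still empty"
    fi
done
```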

Update Neutron DB

neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/nuage/nuage_plugin.ini upgrade head

Restart Services

NEUTRON_SERVICE_SERVICE=/usr/lib/systemd/system/neutron-server.service

crudini --set "${NEUTRON_SERVICE_SERVICE}" Service ExecStart "/usr/bin/neutron-server --config-file /usr/share/neutron/neutron-dist.conf --config-dir /usr/share/neutron/server --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugin.ini --config-file /etc/neutron/plugins/ml2/ml2_conf.ini --config-dir /etc/neutron/conf.d/common --config-dir /etc/neutron/conf.d/neutron-server --log-file /var/log/neutron/server.log"

systemctl daemon-reload

service openstack-nova-api restart

service openstack-nova-cert restart

service openstack-nova-consoleauth restart

service openstack-nova-scheduler restart

service openstack-nova-conductor restart

service openstack-nova-novncproxy restart

service httpd restart

service neutron-server restart

Compute Node

Let's move on to the compute node.

Install Dependencies

rpm -iv http://dl.fedoraproject.org/pub/epel/7/x86_64/Packages/v/vconfig-1.9-16.el7.x86_64.rpm

yum install python-twisted-core perl-JSON libvirt qemu-kvm -y

Remove OVS/Install VRS

rpm -e --nodeps openvswitch-2.9.0-3.el7.x86_64

yum localinstall nuage-openvswitch-5.3.2-28.el7.x86_64.rpm -y

service openvswitch restart

ovs-vsctl show

Configure VRS

VSC1_IP=172.29.236.186

VSC2_IP=

OVS_CONF=/etc/default/openvswitch

sed -i 's/PERSONALITY.*/PERSONALITY=vrs/g' $OVS_CONF

sed -i "s/^.ACTIVE_CONTROLLER=.*/ACTIVE_CONTROLLER=${VSC1_IP}/g" $OVS_CONF

sed -i "s/^.STANDBY_CONTROLLER=.*/STANDBY_CONTROLLER=${VSC2_IP}/g" $OVS_CONF
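To illustrate what these substitutions do, here is a sketch that runs them against a scratch file. The sample content is an assumption (the file shipped with the RPM may look different), and the sketch deliberately skips the standby line when VSC2_IP is empty, which differs slightly from the commands above: they would uncomment STANDBY_CONTROLLER with an empty value.

```shell
VSC1_IP=172.29.236.186
VSC2_IP=

ovs_conf="$(mktemp)"   # stand-in for /etc/default/openvswitch
# Assumed sample content, for illustration only.
printf '%s\n' \
    'PERSONALITY=unset-sample-value' \
    '#ACTIVE_CONTROLLER=' \
    '#STANDBY_CONTROLLER=' > "$ovs_conf"

sed -i 's/^PERSONALITY=.*/PERSONALITY=vrs/' "$ovs_conf"
# "^." matches the single leading comment character, so the line is uncommented.
sed -i "s/^.ACTIVE_CONTROLLER=.*/ACTIVE_CONTROLLER=${VSC1_IP}/" "$ovs_conf"
# Only uncomment the standby entry when a second VSC is actually defined.
if [ -n "$VSC2_IP" ]; then
    sed -i "s/^.STANDBY_CONTROLLER=.*/STANDBY_CONTROLLER=${VSC2_IP}/" "$ovs_conf"
fi

cat "$ovs_conf"
```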

Configure Nova

NOVA_CONF=/etc/nova/nova.conf

crudini --set "${NOVA_CONF}" DEFAULT network_api_class nova.network.neutronv2.api.API

crudini --set "${NOVA_CONF}" DEFAULT libvirt_vif_driver nova.virt.libvirt.vif.LibvirtGenericVIFDriver

crudini --set "${NOVA_CONF}" DEFAULT security_group_api neutron

crudini --set "${NOVA_CONF}" DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

crudini --set "${NOVA_CONF}" neutron ovs_bridge alubr0

Install/Configure MetaAgent

To obtain the Nova password for this step, log on to the controller and run crudini --get /etc/nova/nova.conf keystone_authtoken password.

yum install python-novaclient python-httplib2 -y

rpm -vi nuage-metadata-agent-*.x86_64.rpm

NOVA_OS_PW=<NOVA_PW>

OS_CONTROLLER=172.29.236.180

echo "

METADATA_PORT=9697

NOVA_METADATA_IP=${OS_CONTROLLER}

NOVA_METADATA_PORT=8775

METADATA_PROXY_SHARED_SECRET=NuageNetworksSharedSecret

NOVA_CLIENT_VERSION=2

NOVA_OS_USERNAME=nova

NOVA_OS_PASSWORD=${NOVA_OS_PW}

NOVA_OS_TENANT_NAME=admin

NOVA_OS_AUTH_URL=http://${OS_CONTROLLER}:5000/v3

NUAGE_METADATA_AGENT_START_WITH_OVS=true

#NOVA_REGION_NAME=regionOne

NOVA_API_ENDPOINT_TYPE=publicURL

NOVA_PROJECT_NAME=services

NOVA_USER_DOMAIN_NAME=default

NOVA_PROJECT_DOMAIN_NAME=default

IDENTITY_URL_VERSION=3

NOVA_OS_KEYSTONE_USERNAME=nova

" > /etc/default/nuage-metadata-agent

Restart Services

service openstack-nova-compute restart
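If the metadata agent misbehaves after the restart, one thing worth checking is the file written in the previous step. Because it is generated through a double-quoted echo, any shell variable that was unset at that moment expands silently to an empty string. The sketch below lists keys that ended up with no value; `empty_keys` is a hypothetical helper and the scratch file is a made-up sample. On the compute node you would run it against /etc/default/nuage-metadata-agent.

```shell
# Hypothetical helper: print every KEY from FILE whose line is exactly "KEY=".
empty_keys() {
    sed -n 's/^\([A-Za-z_][A-Za-z0-9_]*\)=$/\1/p' "$1"
}

# Scratch file imitating a metadata agent file where one variable was unset.
agent_env="$(mktemp)"
printf '%s\n' \
    'METADATA_PORT=9697' \
    'NOVA_METADATA_IP=' \
    'NOVA_METADATA_PORT=8775' > "$agent_env"

empty_keys "$agent_env"
```

Any key printed here points at a variable that should be set before regenerating the file.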

Verify

Now that both the controller and compute node have been configured, we will verify each of the components can see each other.

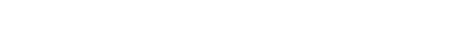

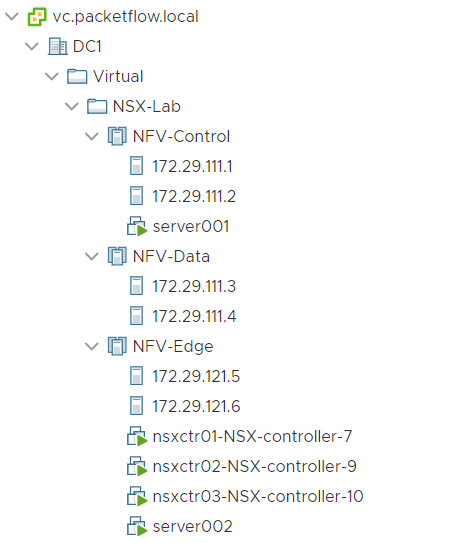

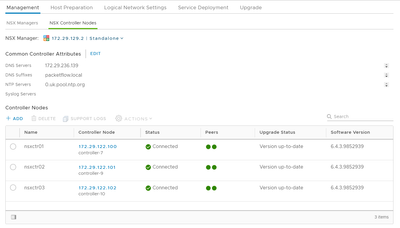

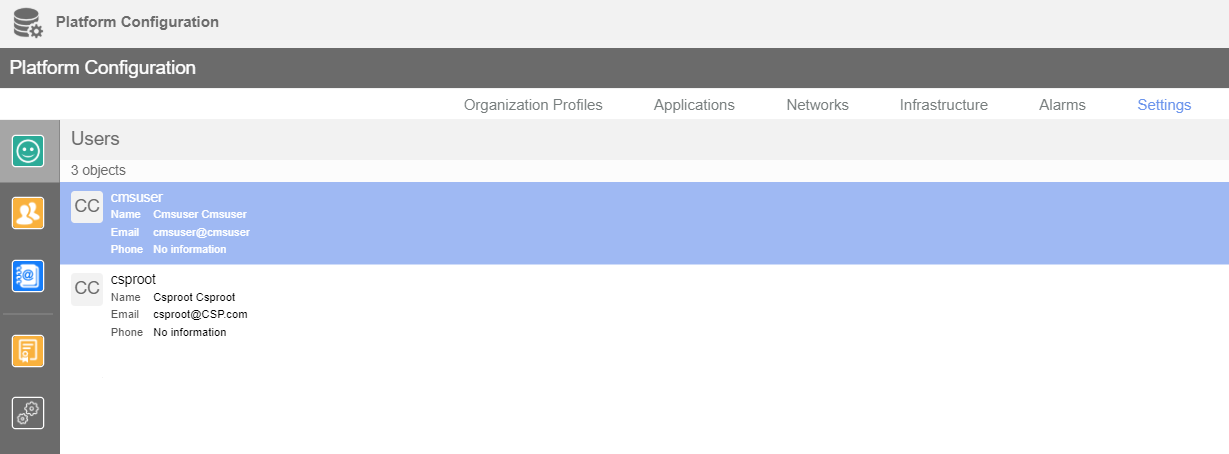

Via VSD

To validate via the VSD, go to the monitoring console. From there you will be able to see the status of the controller and the VRS.

Figure 2: Verify via VSD

Via VSC

To confirm connectivity from your VSC to VRS you can run the following command:

*A:vsc01# show vswitch-controller vswitches

===============================================================================

VSwitch Table

===============================================================================

-------------------------------------------------------------------------------

Legend: * -> Primary Controller ! -> NSG in Graceful Restart

-------------------------------------------------------------------------------

vswitch-instance      System-Id   Personality   Uptime        Num VM/Host/Bridge/Cont   Num Resolved

-------------------------------------------------------------------------------

*va-172.29.236.181*   n/a         VRS           0d 00:03:31   0/0/0/0                   0/0/0/0

Outro

Well, that concludes this three-part Nuage install series. I hope you've had fun, and stay tuned for future Nuage tutorials.