Introduction

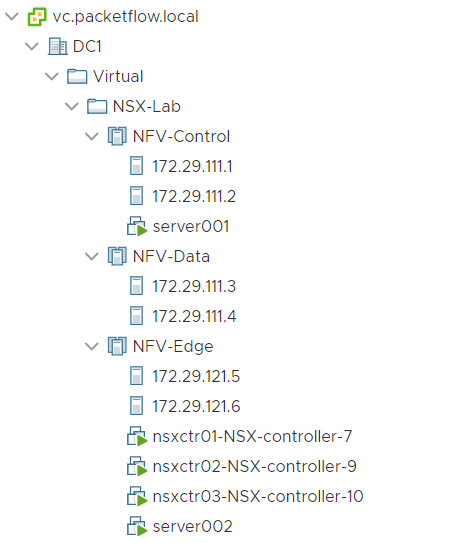

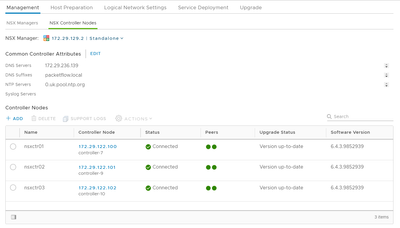

The controller cluster in the NSX platform is the control plane component responsible for managing the hypervisor switching and routing modules, including the logical switches and the VXLAN overlay. Because the controller is centralized and decoupled from the data plane, it can reduce broadcast traffic, which in turn removes the need to configure multicast within the underlay.

Controller to ESXi Communication

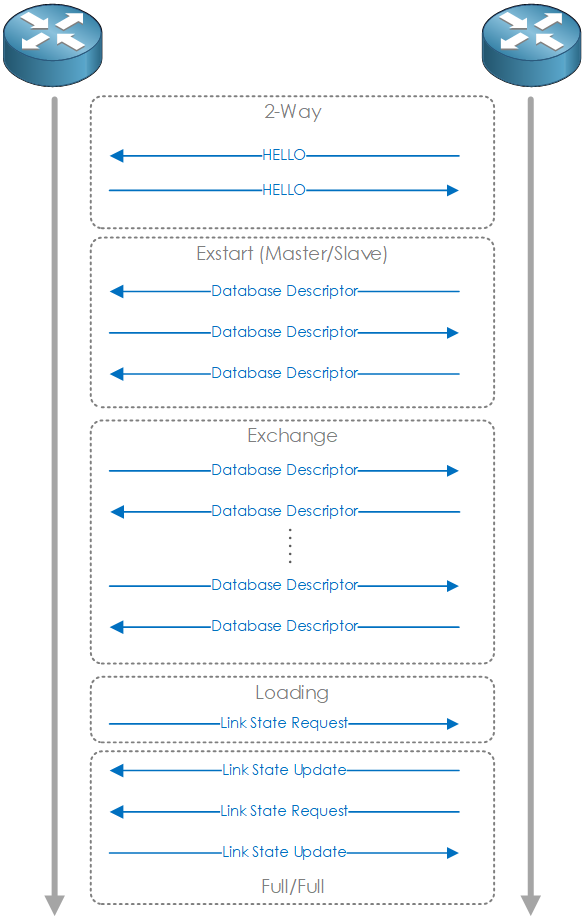

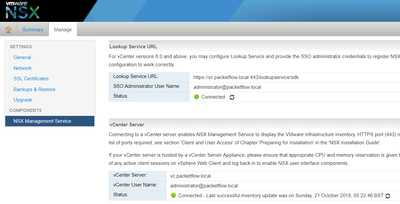

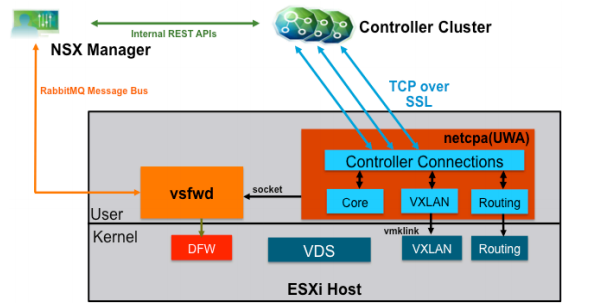

Communication between the NSX Controller and the ESXi hosts is handled by the User World Agent (UWA), which runs on each host as a daemon called netcpa. The UWA is not used to manage the distributed firewall. Instead, the vsfwd daemon communicates directly between the host and NSX Manager (shown in Figure 1).

Figure 1 - NSX Controller / ESXi components.[1]

Tables

MAC Table

The MAC table contains the MAC addresses learned behind each VTEP.

This table is populated by the User World Agent (UWA), which runs on each host. When a new VM is started, the UWA obtains the VM's MAC address and the corresponding VTEP, and reports both to the NSX Controller. Every host follows this process for each of its VMs.
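The MAC table can be pictured as a per-VNI dictionary keyed by VM MAC address. The sketch below is a toy model only: the `MacTable` class, its method names, and the sample addresses are all hypothetical and do not reflect how the controller stores this data internally.

```python
from collections import defaultdict


class MacTable:
    """Toy model of a controller MAC table: VNI -> {VM MAC -> VTEP IP}."""

    def __init__(self):
        self._entries = defaultdict(dict)

    def report(self, vni, vm_mac, vtep_ip):
        # Hypothetical hook: called when a host agent reports a started VM.
        self._entries[vni][vm_mac.lower()] = vtep_ip

    def lookup(self, vni, vm_mac):
        # Return the VTEP IP behind which the MAC lives, or None if unknown.
        return self._entries.get(vni, {}).get(vm_mac.lower())


mac_table = MacTable()
mac_table.report(5001, "00:50:56:AA:BB:01", "10.0.0.11")
mac_table.report(5001, "00:50:56:AA:BB:02", "10.0.0.12")
print(mac_table.lookup(5001, "00:50:56:aa:bb:01"))
```

Keying on the VNI first keeps entries for different logical switches separate, so the same MAC address on two logical switches would not collide.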

ARP Table

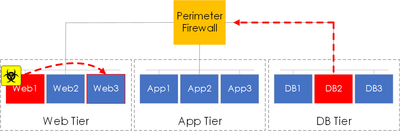

The ARP table is used to suppress broadcast traffic.

When a VM boots and starts to send traffic, the local VTEP builds the IP-to-MAC address mapping and sends this information to the NSX Controller.

This greatly reduces broadcast traffic. When a VM sends an ARP request (a broadcast), the VTEP intercepts the request and forwards it to the NSX Controller. The controller then responds to the request, and the host caches the answer and passes it on to the VM. This prevents all other hosts from receiving the ARP request broadcast.
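The ARP suppression flow described above can be sketched as a two-level lookup: the host's local cache first, then the controller's ARP table. This is a simplified illustration, not the product's implementation. The `ArpSuppressor` class and its names are hypothetical, and the fallback to a normal broadcast when the controller has no entry is an assumption made for the sketch.

```python
class ArpSuppressor:
    """Toy model of ARP suppression for a single host and its controller."""

    def __init__(self):
        self.controller_arp = {}  # (vni, ip) -> mac, held by the controller
        self.host_cache = {}      # (vni, ip) -> mac, cached on the host

    def learn(self, vni, ip, mac):
        # The VTEP reports an IP-to-MAC mapping once a VM starts sending traffic.
        self.controller_arp[(vni, ip)] = mac

    def resolve(self, vni, target_ip):
        """Return (mac, source) for an intercepted ARP request."""
        key = (vni, target_ip)
        if key in self.host_cache:
            return self.host_cache[key], "host-cache"
        mac = self.controller_arp.get(key)
        if mac is not None:
            self.host_cache[key] = mac  # the host caches the controller's answer
            return mac, "controller"
        # Assumption: with no known mapping, the request is flooded as usual.
        return None, "broadcast"


arp = ArpSuppressor()
arp.learn(5001, "192.168.1.10", "00:50:56:aa:bb:01")
print(arp.resolve(5001, "192.168.1.10"))
print(arp.resolve(5001, "192.168.1.10"))
```

The first call is answered by the controller and the second by the host cache, so neither request needs to reach the other hosts.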

VTEP Table

The VTEP table tracks the IP and MAC addresses of all VTEPs for each VNI.

The first time a VM connects to a logical switch, the ESXi host updates its local VTEP table and then sends a report with this information to the NSX Controller.

The NSX Controller then pushes the VTEP table for that VNI out to all relevant hosts via the UWA.
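The report-then-push behaviour can be sketched as follows. This is a toy model under stated assumptions: the `VtepTable` class is hypothetical, hosts are identified by their VTEP IP, and "pushing" is modelled as copying the per-VNI table into a dictionary that stands in for each host's local copy.

```python
from collections import defaultdict


class VtepTable:
    """Toy model of a controller VTEP table with per-VNI push to hosts."""

    def __init__(self):
        self.by_vni = defaultdict(dict)  # vni -> {vtep_ip: vtep_mac}
        self.pushed = defaultdict(dict)  # host vtep_ip -> {vni: table copy}

    def report(self, vni, vtep_ip, vtep_mac):
        # A host reports that it now has a VM on this logical switch (VNI).
        self.by_vni[vni][vtep_ip] = vtep_mac
        # Push the updated table to every host participating in this VNI.
        for host in self.by_vni[vni]:
            self.pushed[host][vni] = dict(self.by_vni[vni])


table = VtepTable()
table.report(5001, "10.0.0.11", "00:50:56:00:00:11")
table.report(5001, "10.0.0.12", "00:50:56:00:00:12")
table.report(5002, "10.0.0.13", "00:50:56:00:00:13")
print(table.pushed["10.0.0.11"])
```

In this model a host only receives tables for the VNIs it participates in, which matches the idea that the push is scoped to the VNI being updated.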

Cluster Slicing

NSX Controller nodes must be deployed as a cluster of three. A master node is elected for each role, and the master then assigns workloads to the other nodes.

The workloads are split into slices and spread across the nodes. If a node in the cluster fails, its slices are redistributed.

As a result, all controller nodes are active at all times. If one controller node fails, the tasks it owned are reassigned to the remaining nodes so that the cluster stays operational.[2]
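Slicing and redistribution can be illustrated with a small sketch. The source does not describe the actual assignment algorithm, so the round-robin scheme, the function names, and the node names below are assumptions chosen purely for illustration.

```python
def assign_slices(num_slices, nodes):
    """Spread slice IDs across nodes round-robin (assumed scheme)."""
    ordered = sorted(nodes)
    return {s: ordered[s % len(ordered)] for s in range(num_slices)}


def redistribute(assignment, failed_node):
    """Reassign the failed node's slices to the surviving nodes."""
    survivors = sorted(set(assignment.values()) - {failed_node})
    if not survivors:
        raise RuntimeError("no surviving controller nodes")
    new_assignment = dict(assignment)
    orphaned = [s for s, n in sorted(assignment.items()) if n == failed_node]
    for i, slice_id in enumerate(orphaned):
        new_assignment[slice_id] = survivors[i % len(survivors)]
    return new_assignment


before = assign_slices(6, ["controller-1", "controller-2", "controller-3"])
after = redistribute(before, "controller-2")
print(after)
```

Only the slices owned by the failed node move. Slices on healthy nodes keep their owner, which limits the disruption caused by a single failure.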

References

[1] "VMware NSX Network Virtualization Design Guide." https://www.vmware.com/files/kr/pdf/products/nsx/vmw-nsx-network-virtualization-design-guide.pdf. Accessed 5 Apr. 2018.

[2] "NSX Controller clusters - Learning VMware NSX." Accessed 5 Apr. 2018.